Hugging Face: Empowering AI with Open-Source Innovation

Sentiment Analysis with Pipeline()

The pipeline() function abstracts away the technical complexities involved in using machine learning models, such as loading pre-trained models, tokenizing data, and handling inference. It is versatile, supporting many tasks, including text classification, named entity recognition (NER), question answering, text generation, and more.

Model with Sentiment Analysis

Using Hugging Face's Transformers library, implementing sentiment analysis becomes incredibly simple. The pipeline() function allows you to load a pre-trained model designed for sentiment analysis and immediately predict the sentiment of a given text. For example, by using a model like distilbert-base-uncased-finetuned-sst-2-english, you can easily classify text as positive or negative with minimal code.
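
As a minimal sketch of the idea described above, the snippet below wraps pipeline() in a few small helpers. The helper names (load_classifier, describe, classify) are illustrative and not part of the Transformers API, and the model is downloaded the first time load_classifier() is called.

```python
MODEL_NAME = "distilbert-base-uncased-finetuned-sst-2-english"


def load_classifier(model_name: str = MODEL_NAME):
    """Build a sentiment-analysis pipeline (downloads the model on first use)."""
    # Imported lazily so the formatting helpers work without loading Transformers.
    from transformers import pipeline

    return pipeline("sentiment-analysis", model=model_name)


def describe(prediction: dict) -> str:
    """Format one pipeline result such as {'label': 'NEGATIVE', 'score': 0.99}."""
    return f"{prediction['label']} (confidence {prediction['score']:.2%})"


def classify(text: str, classifier) -> str:
    """Classify a single string; the pipeline returns a list with one dict."""
    return describe(classifier(text)[0])
```

Calling classify("Hate this.", load_classifier()) should return the predicted label together with its confidence as a percentage.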

Hugging Face Model Hub

The Hugging Face Model Hub at huggingface.co/models serves as the central platform where developers can browse, download, and share models.

Growing Repository of Pretrained Models: Hugging Face offers a continuously expanding collection of pretrained machine learning models, making it easier for developers to apply state-of-the-art models without training them from scratch. The models cover tasks in natural language processing, computer vision, and more.
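
The Hub can also be browsed from code. The sketch below assumes the separate huggingface_hub package is installed and that its HfApi.list_models() accepts the filter and limit arguments, as recent releases do. The helper names are hypothetical, and the listing needs network access.

```python
def model_id(info) -> str:
    """Return the repository id of a model entry."""
    # Newer huggingface_hub releases expose `id`; older ones used `modelId`.
    return getattr(info, "id", None) or getattr(info, "modelId")


def hub_url(repo_id: str) -> str:
    """Build the Model Hub page URL for a repository id."""
    return f"https://huggingface.co/{repo_id}"


def list_hub_models(task: str = "text-classification", limit: int = 5) -> list:
    """List a few model ids tagged with `task` (requires network access)."""
    # Imported lazily so the helpers above work without huggingface_hub.
    from huggingface_hub import HfApi

    return [model_id(m) for m in HfApi().list_models(filter=task, limit=limit)]
```

For example, hub_url("distilbert-base-uncased-finetuned-sst-2-english") gives the page of the sentiment model used in this post.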

(i) pip installation

STEP 1 : Start by creating a virtual environment in your project directory.

    python -m venv .env

STEP 2 : Activate the virtual environment on Windows.

    .env\Scripts\activate

STEP 3 : Now you’re ready to install 🤗 Transformers with the following command.

    pip install transformers

STEP 4 : For CPU support only, you can conveniently install 🤗 Transformers and a deep learning library in one line. For example, install 🤗 Transformers and PyTorch with

    pip install "transformers[torch]"

STEP 5 : 🤗 Transformers and TensorFlow 2.0

    pip install "transformers[tf-cpu]"

STEP 6 : 🤗 Transformers and Flax

    pip install "transformers[flax]"

STEP 7 : Finally, check if 🤗 Transformers has been properly installed by running the following command. It will download a pretrained model.

    python -c "from transformers import pipeline; print(pipeline('sentiment-analysis')('we love you'))"

STEP 8 : The command then prints out the label and score.
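
Before downloading any model, it can help to confirm what the steps above actually installed. The following sketch uses only the Python standard library; the function names are illustrative, and the backend list simply mirrors the three libraries mentioned in the steps.

```python
from importlib import metadata, util


def installed_version(package: str):
    """Return the installed version of a package, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def backend_report(backends=("torch", "tensorflow", "flax")) -> dict:
    """Report which deep learning backends can be found, without importing them."""
    return {name: util.find_spec(name) is not None for name in backends}


print("transformers version:", installed_version("transformers"))
for name, available in backend_report().items():
    print(f"{name}: {'found' if available else 'not installed'}")
```

If the first line prints None, the install in STEP 3 did not reach the active environment, which usually means the virtual environment was not activated.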

(ii) conda installation

STEP 1 : Download hf-env.yml from my GitHub repo https://github.com/ThanushK09/HUGGING-FACE/blob/main/hf-env.yml

STEP 2 : Open your terminal and navigate to the directory where hf-env.yml is saved. You can do this using the cd command

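STEP 3 : Once you're in the correct directory, create the conda environment by running

    conda env create --file hf-env.yml

STEP 4 : After creating the environment, activate it by running conda activate followed by the environment name defined in hf-env.yml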

EXAMPLE CODE: NLP with Transformers

Sentiment Analysis using Classifier

The code uses the Hugging Face pipeline for sentiment analysis with the pre-trained model distilbert-base-uncased-finetuned-sst-2-english. It classifies the input text "Hate this." and predicts a NEGATIVE sentiment with a confidence score of 0.9997, indicating strong negative sentiment. The pipeline abstracts away the complexities, allowing easy deployment of models for specific tasks.

Batch Predictions
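
The same classifier can score several texts in one call by passing a list instead of a single string. The snippet below is a sketch of that pattern; load_classifier and classify_batch are hypothetical helper names, and the example sentences in the usage note are made up for illustration.

```python
MODEL_NAME = "distilbert-base-uncased-finetuned-sst-2-english"


def load_classifier(model_name: str = MODEL_NAME):
    """Build the sentiment-analysis pipeline (downloads the model on first use)."""
    from transformers import pipeline  # lazy import keeps the helper below lightweight

    return pipeline("sentiment-analysis", model=model_name)


def classify_batch(texts, classifier) -> list:
    """Return (text, label, score) tuples, one per input string."""
    texts = list(texts)
    # A list goes in; the pipeline returns one result dict per text, in order.
    predictions = classifier(texts)
    return [(t, p["label"], round(p["score"], 4)) for t, p in zip(texts, predictions)]
```

A call such as classify_batch(["Hate this.", "This is great!"], load_classifier()) returns one tuple per sentence, in the same order as the input.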

Summarization

The summarization model condenses the input text while retaining key information, reducing redundancy. Here, it extracts the core idea about Hugging Face’s role in open-source AI and its three main resources.
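
A summarization call might look like the sketch below. The model name facebook/bart-large-cnn and the length limits are assumptions chosen for illustration, not taken from this post, and the code assumes a Transformers release that provides the "summarization" pipeline task.

```python
def load_summarizer(model_name: str = "facebook/bart-large-cnn"):
    """Build a summarization pipeline (model name is an assumption)."""
    from transformers import pipeline  # lazy import; the model downloads on first use

    return pipeline("summarization", model=model_name)


def summarize(text: str, summarizer, min_length: int = 10, max_length: int = 60) -> str:
    """Return the summary text for one input passage."""
    result = summarizer(text, min_length=min_length, max_length=max_length)
    # The pipeline returns a list with one dict per input.
    return result[0]["summary_text"]


def compression_ratio(text: str, summary: str) -> float:
    """Word count of the summary divided by the word count of the original."""
    return len(summary.split()) / len(text.split())
```

The compression_ratio helper gives a rough sense of how much the text was condensed; values well below 1.0 indicate a much shorter summary.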

Summarization + Sentiment Analysis

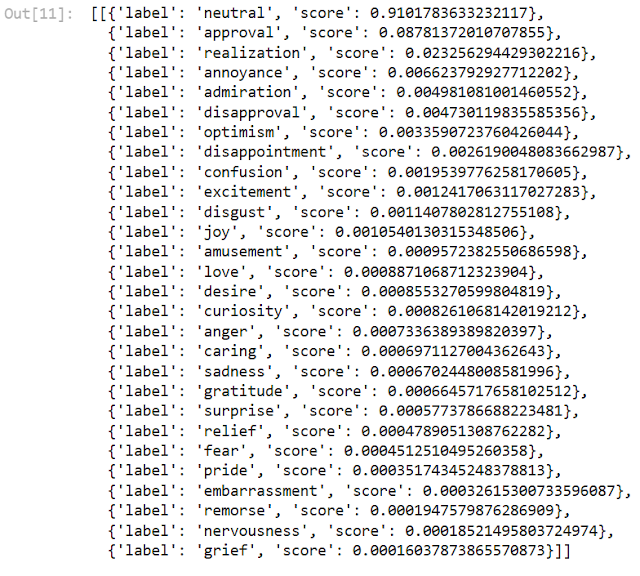

Summarization extracts the key points from a text while minimizing details, which can sometimes reduce emotional intensity. The original text had strong admiration (95.26%), but the summary is classified as mostly neutral (91%), showing a shift in sentiment. This happens because summarization focuses on core information rather than expressive or emotional elements.
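
The comparison described above can be sketched by running the classifier on both the original text and its summary. The function names are hypothetical; summarizer and classifier are assumed to be pipeline objects that return results in the usual Transformers list-of-dicts format.

```python
def top_label(classifier_output) -> tuple:
    """Return (label, score) of the highest-scoring class."""
    scores = classifier_output
    # Some configurations return a nested list (one list of scores per input).
    if scores and isinstance(scores[0], list):
        scores = scores[0]
    best = max(scores, key=lambda s: s["score"])
    return best["label"], best["score"]


def sentiment_shift(text: str, summarizer, classifier) -> dict:
    """Classify the original text and its summary so the two can be compared."""
    summary = summarizer(text)[0]["summary_text"]
    return {
        "original": top_label(classifier(text)),
        "summary_text": summary,
        "summary": top_label(classifier(summary)),
    }
```

If the "original" and "summary" entries carry different labels, the summary has lost some of the emotional tone of the source text.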

Deploy Chatbot UI

Text Sentiment Chatbot

The top3_text_classes function classifies the sentiment of the input message using the classifier and returns the top 3 sentiment labels with their respective scores. It takes the classifier's output and strips the list and dictionary punctuation so that each label and score is displayed cleanly. The gr.ChatInterface is used to create an interactive Text Sentiment Chatbot, where users can input text, and the chatbot will classify the sentiment as positive, negative, or neutral. The title and description attributes define the chatbot's name and purpose. Finally, demo_sentiment.launch() launches the chatbot, enabling real-time sentiment analysis through a user-friendly interface.

Summarizer Chatbot

The Summarizer Chatbot uses a transformer-based model to condense user-provided text while retaining key information. It leverages Gradio's ChatInterface to create an interactive UI where users input text, and the bot returns a concise summary. This makes it useful for quickly extracting essential details from long passages.
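
Both chatbots follow the same pattern: a function that takes (message, history) and returns a string, wrapped in gr.ChatInterface. The sketch below assumes Gradio is installed and that classifier and summarizer are pipelines created elsewhere. The factory functions, the output formatting, and the length limits are illustrative choices and may differ from the code originally used here.

```python
def format_top3(classifier_output) -> str:
    """Format the three highest-scoring labels, one per line."""
    scores = classifier_output
    # A classifier that returns all scores may nest them one level deep.
    if scores and isinstance(scores[0], list):
        scores = scores[0]
    top3 = sorted(scores, key=lambda s: s["score"], reverse=True)[:3]
    return "\n".join(f"{s['label']}: {s['score']:.4f}" for s in top3)


def make_sentiment_fn(classifier):
    """Create the chat callback for the Text Sentiment Chatbot."""
    def top3_text_classes(message, history):
        return format_top3(classifier(message))
    return top3_text_classes


def make_summarizer_fn(summarizer):
    """Create the chat callback for the Summarizer Chatbot."""
    def summarizer_bot(message, history):
        return summarizer(message, min_length=10, max_length=100)[0]["summary_text"]
    return summarizer_bot


def build_demo(chat_fn, title: str, description: str):
    """Wrap a chat callback in a Gradio ChatInterface."""
    import gradio as gr  # lazy import so the callbacks can be tested without Gradio

    return gr.ChatInterface(chat_fn, title=title, description=description)
```

A typical use would be demo_sentiment = build_demo(make_sentiment_fn(classifier), "Text Sentiment Chatbot", "Enter text to classify its sentiment.") followed by demo_sentiment.launch(). For the classifier to return scores for every label, it can be created with top_k=None.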

Creating a Vanilla Chatbot Using Hugging Face

STEP 1 : Create a new Space as required by clicking the "New Space" icon, as shown in the figure below.

STEP 3 : Select the Space SDK from Streamlit, Gradio, Docker, or Static. If you select Gradio, choose one of the available Gradio templates. Then choose the Space hardware.
